Why Didn't Stanley Kubrick & Arthur C. Clarke Predict The iPad?

Or, why does everyone in 2001: A Space Odyssey use a clipboard?

I don’t remember how old I was when I first watched 2001. I don’t remember the year, or where. What I do remember is the vivid passion on my father’s face as he unpacked Kubrick’s genius for my much-too-young self.

These people in the 1960s (i.e., his childhood) had predicted much of the world that was to come. They reached far beyond their present and grasped the threads of the world that was to be. And in my father’s eyes, that was true achievement.1

Naturally, as I grew older, I rebelled by watching more 2001. In middle school, I wrote a frame-by-frame analysis of the movie for a report. My arguments were so exhaustively exhausting2 that I was forever known as “that girl” by my teachers.3

As I’ve gotten older, my father’s ideas have been transmuted into an obsession with modeling the future, predictions, and the history of The Future. I am obsessed with the mechanics of making predictions. How did people go about modeling the future? What assumptions did they discard on their way to their conclusions? And why do some people come so close yet remain so frustratingly far off the mark?

Or, in other words, how did Kubrick and Clarke come so close and (yet) remain so far from predicting the iPad?

2001: A Case Study

2001: A Space Odyssey offers a great case study of the non-intuitive ways in which predictions miss their target. In the movie, most of the predictions are surprisingly on point.

Dr. Heywood Floyd watches a movie/TV on a seatback display during his flight to the low-Earth-orbit space station,

Glass cockpits are everywhere. As far as the eye can see. Showing telemetry, guidance and math (??)

I was struck by how similar they are to the dashboard on SpaceX’s Dragon capsule,4

When Dr. Floyd arrives at the space station, he goes through an automated immigration kiosk with biometric authentication,

He pays for a video call via a very modern-looking credit card,

When Dr. Floyd travels to the Tycho Magnetic Anomaly-1 (TMA-1) via shuttle, its pilot uses augmented reality guidance on a screen to land in the lunar night,

In the shuttle, he eats artificial, or synthetic, meat — “What's that? Chicken?” “Something like that. Tastes the same anyway.”

On the surface, in front of the TMA-1, one of the crew takes videos and pictures with a cuboidal-ish camera that reminds me of the RED V-Raptor, with a specialized housing, lens and display attachment,

When we fast forward to life on Discovery One, one of the most iconic sequences in the movie shows HAL playing chess with Frank,

and winning,5

It is astonishing how much they got right.

2001 is filled with predictions that have panned out, but few are as famous as the “iPad scene.” It is the second most famous prediction in the movie. The first is HAL.

Samsung even cited it in a court case against Apple as prior art. Samsung’s lawyers contended that Kubrick and Clarke predicted the iPad,

As much as I would like Samsung’s lawyers to be right, they’re sadly wrong. Because as impressive as that scene is, it is immediately followed up by the astronauts using paper with ever-present clipboards for their day-to-day work,

Within the movie, paper is everywhere and their portable TVs are, sadly, just that - TVs. It’s striking how everything in 2001’s universe still runs on paper, as implied by the 0g pen,

The newspapers,

The folio Dr. Floyd carries with him on his way to the moon,

The briefing materials that are used during Dr. Floyd’s lunar meeting,

It seems never to have occurred to I.J. Good, Marvin Minsky, Arthur C. Clarke, Stanley Kubrick, and over fifty technical advisory organizations, including the NASA of the ’60s, that a portable TV would necessarily imply portable computers.

Portable computers would then, in turn, imply the existence of radically new forms of information capture. Technologies that would be far more comfortable to use in space than pen-and-paper,

Why didn’t they see this coming? The scientists and engineers involved in creating the world of 2001 were some of the smartest, most technically proficient people of the time. They were at the cutting edge. They were making the cutting edge. Why didn’t they connect the dots?

I’ve subjected many people to my rants on this topic, and some have pointed out that it might not be a fair question to ask. Did they have the dots in the first place? Could they have connected them at all?

Could Kubrick & Clarke have predicted the iPad?

Yes.

Kubrick and Clarke weren’t lacking in contemporaneous examples that could have helped them to make the leap. They were lacking in insight. Insight that, we can say, with the benefit of hindsight, was within arm’s reach the entire time. The fundamental components of what would become the iPad were being actively explored by the organizations Kubrick and Clarke consulted with.

One of their closest collaborators, Marvin Minsky, had all the pieces, but neither he nor his collaborators were able to put them together.

In 1963, Ivan Sutherland, an MIT doctoral student whose thesis committee included Marvin Minsky, finished building the first CAD system, which served as one of the genesis points of graphical user interfaces and object-oriented programming.

In his system, humans would collaborate with machines (the aid part in Computer Aided Design) as partners in the design process. The computer wouldn’t just offer a way to “undo” your work, but it would allow you to manipulate the state, relationships, and topology of your designs. It was a work of genius. He called it Sketchpad,

Sutherland grasped that computers could be better than just paper. He demonstrated the ability to undo mistakes, transform pre-existing drawings, use a primitive form of version control and more. In his paper, he emphasized that computers were more than just a new kind of slate; they were design partners-in-waiting,

As we have seen in the introductory example, drawing with the Sketchpad system is different from drawing with an ordinary pencil and paper. Most important of all, the Sketchpad drawing itself is entirely different from the trail of carbon left on a piece of paper. Information about how the drawing is tied together is stored in the computer as well as the information which gives the drawing its particular appearance. Since the drawing is tied together, it will keep a useful appearance even when parts of it are moved. For example, when we moved the corners of the hexagon onto the circle, the lines next to each corner were automatically moved so that the closed topology of the hexagon was preserved. Again, since we indicated that the corners of the hexagon were to lie on the circle they remained on the circle throughout our further manipulations.

It is this ability to store information relating the parts of a drawing to each other that makes Sketchpad most useful.

— Ivan Sutherland, "Sketchpad: A man-machine graphical communication system," 1963.
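To make Sutherland's point concrete, here is a toy sketch in Python of what "storing how the drawing is tied together" could look like. It is only an illustration under my own assumptions, not a reconstruction of Sketchpad's actual solver: the names `Point`, `Circle`, `Drawing`, `enforce_on_circle`, and `hexagon_on` are hypothetical, and the only constraint modeled is "every corner lies on the circle."

```python
import math
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float


@dataclass
class Circle:
    center: Point
    radius: float


class Drawing:
    """Hypothetical toy model: corners, the edges tying them together, one constraint."""

    def __init__(self, circle, points):
        self.circle = circle
        self.points = points
        n = len(points)
        # Topology: edges name their endpoints by index instead of copying
        # coordinates, so a moved corner drags its two lines along with it.
        self.edges = [(i, (i + 1) % n) for i in range(n)]

    def enforce_on_circle(self):
        """Project every corner back onto the circle (the stored constraint)."""
        c, r = self.circle.center, self.circle.radius
        for p in self.points:
            dx, dy = p.x - c.x, p.y - c.y
            dist = math.hypot(dx, dy)
            if dist == 0:
                continue  # direction is undefined at the center; leave the point alone
            p.x = c.x + dx * r / dist
            p.y = c.y + dy * r / dist

    def move_point(self, index, x, y):
        """Drag one corner somewhere, then re-apply the constraint."""
        self.points[index].x = x
        self.points[index].y = y
        self.enforce_on_circle()

    def segments(self):
        """Line segments derived from the topology, as coordinate pairs."""
        return [
            ((self.points[a].x, self.points[a].y), (self.points[b].x, self.points[b].y))
            for a, b in self.edges
        ]


def hexagon_on(circle):
    """Build a hexagon whose six corners start on the given circle."""
    corners = [
        Point(
            circle.center.x + circle.radius * math.cos(k * math.pi / 3),
            circle.center.y + circle.radius * math.sin(k * math.pi / 3),
        )
        for k in range(6)
    ]
    return Drawing(circle, corners)


drawing = hexagon_on(Circle(Point(0.0, 0.0), 1.0))
drawing.move_point(0, 3.0, 4.0)  # drag a corner far off the circle
```

After the drag, the corner snaps back onto the circle and the hexagon stays closed, because the lines are derived from shared points instead of being stored as independent strokes. A sheet of paper keeps only the "trail of carbon"; this keeps the relationships.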

Sutherland first published his paper in January, 1963.

Kubrick and Clarke began developing 2001 in 1964; filming started at the end of 1965.

Sketchpad drew from earlier pen-based graphical interfaces. Interfaces that Marvin Minsky played with and cut his teeth on. When he was a student, the TX-0, the original font of hacker culture, could use a light pen as an input device. And the people at MIT played video games with it. Minsky himself might have played these games,

Minsky was immersed in the soup that created modern computing because his teachers and mentors built much of it. To quote Minsky,

I had a great teacher in college, Joe Licklider. Licklider and Miller were assistant professors when I was an undergraduate. And we did a lot of little experiments together. And then about 1962, I think, Licklider went to Washington to start a new advanced research project that was called ARPA.

— Marvin Minsky, MIT oral history interview for the InfiniteMIT Project.

While Licklider was at MIT, teaching Minsky, he was also a human factors expert for SAGE, or the Semi-Automatic Ground Environment. A hyper-ambitious project to bring together RADAR data from across the US with air-traffic control data and military flight data to detect overflight by the USSR.

SAGE is typically remembered as the first large-scale computer network, but it was also the first widespread deployment of real-time compute. People around the country would interact with SAGE’s computers via consoles that used “light guns” to point and interact with digital objects on the display,

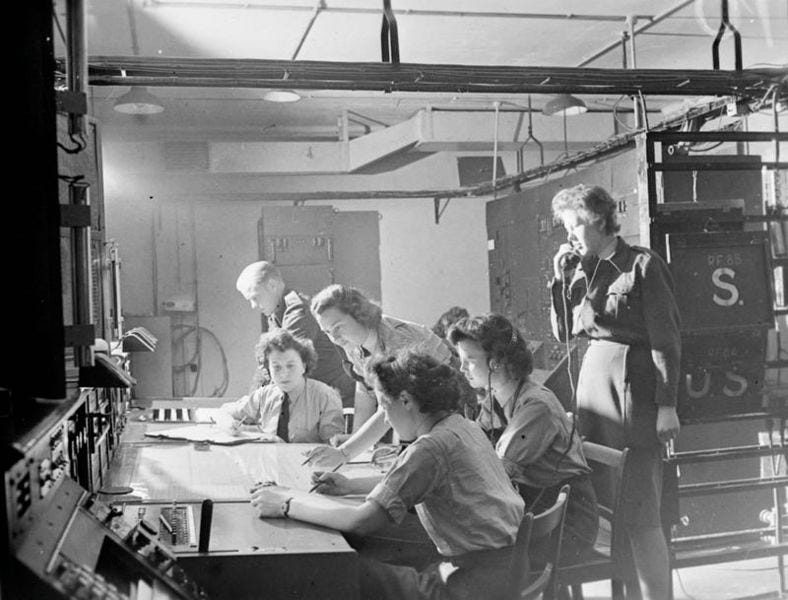

SAGE significantly automated what used to be an intensely manual and paper-based task. The earliest defensive RADAR systems, like Chain Home, relied on paper to pass around information;

SAGE took the operations performed by those people on paper and directly translated them onto the screen while reducing the number of people required. Was it a direct one-to-one translation? No, but it was pretty close.

Minsky grew up within arm’s reach of the seeds that grew into the iPad and the (eventual) obsession with going “paperless.” He was arguably one of the very few people in the world who could have corrected Kubrick and Clarke when they wrote the iconic scene of Dave showing HAL his sketches on paper. But he didn’t. Why?

Why is there a gap between 2001’s adept predictions about computing, screen technology, and AI and its inability to put two and two together and augur the inevitable end of ubiquitous paper?

What did Kubrick & Clarke get right and why? A story about flat screens.

Before we get there, it’s worth examining the inverse, i.e., unlikely predictions they did get right.

Kubrick and Clarke successfully predicted a closely related technology — flat screens. For screens, in 2001, the world was flat. Be it at large lunar bases,

Or, spaceships heading into the unknown,

They nailed it. That’s impressive. And it should be impressive.

We take the existence of flat screens for granted, but when Kubrick and Clarke were making 2001, there weren’t any in existence.6 The very first practical flat screen display (which was also a touchscreen) was invented sometime in 1964 by Donald Bitzer for PLATO and it wasn’t deployed until the late 1960s — i.e., after Kubrick and Clarke had begun writing the novel and film.7

He was also outside of Kubrick and Clarke’s sphere of influence, so it’s doubtful that he was the source of their flatscreen dreams.

So where did the dream come from? A reasonable guess is RCA, the Radio Corporation of America. The Google of its time.

By the 1960s, RCA had doggedly pursued the end of Cathode Ray Tube (CRT) technology for more than a decade. RCA's president and chairman, David Sarnoff, wanted the company to pursue the creation of "optical amplifiers," likening them to the invention of the loudspeaker,

Now I should like to have you invent an electronic amplifier of light that will do for television what the amplifier of sound does for radio broadcasting. Such an amplifier of light would provide brighter pictures for television that could be projected in the home or the theater on a screen of any desired size. An amplifier of sound gave radio a 'loudspeaker,' and an amplifier of light would give television a “big-looker.”

— David Sarnoff, RCA President, September 27th, 1951

Three years later, RCA researchers debuted a flat-panel light amplifier that consisted of an electroluminescent phosphor segment combined with a Cadmium Sulfide (CdS) photoconductor; Sarnoff attended the unveiling,

By this point, an RCA engineer, Jan Rajchman, had summarized RCA’s new flat-panel dreams in an internally circulated memo,

For a long time there has been a desire to produce a ‘Mural Television Display’ which would be a large flat box-like device with a large rectangular face and relatively small thickness. This then could be used on the wall of the viewing room.

— Jan Rajchman, Internal RCA memo, 1952

By 1955, they had made rough, pixelated flat-panel TVs that offered — to them — a glimpse of the future, their mural TV that would eventually hang on a wall,

These were big, unwieldy lab prototypes, but they were prototypes of a future beyond CRTs. A future that RCA publicized broadly through the press. From one of RCA’s patents,

I don’t know if Kubrick followed these developments closely, but it’s very likely he did. These predictions were “in the air.” RCA had made sure of it, just as Google makes sure you know about its AI projects or Google X today.

Knowing the above, it shouldn’t be surprising to us that 2001 followed RCA’s trail of logic and shrank its hangs-on-the-wall TV even further to put it on a table. It was the next logical step.

Nor should it be surprising that 2001 has 0 CRTs; their elimination was — for them — another logical step.

What should be surprising, and what remains surprising to me, is that no one thought about writing on a screen, given that they were already “writing” (or drawing) on CRTs.

In their brilliance, they saw how A would directly lead to B ("Now that we can build useful flat displays, it means that someday there will be no more thick CRTs!"), but they couldn't predict how A and B would radically reshape their environments.

Or, "if we can put TVs on a table as if they were pieces of paper, would that mean that paper will become a TV?"

So... Why didn’t they?

Most pieces I’ve read on failed predictions usually assume that the correct prediction didn’t occur to the predictors. I don’t think that’s the case with Kubrick and Clarke. It doesn’t give them or their team enough credit. It’s also a very boring way of looking at the world.

I want to make the argument that they were too skilled not to. But they were (sadly) too smart to embrace the result.

While in the movie, the iPad-like device is just a TV, in the novel, it is so much more;

When he tired of official reports and memoranda and minutes, he would plug his foolscap-sized Newspad into the ship's information circuit and scan the latest reports from Earth. One by one he would conjure up the world's major electronic papers; he knew the codes of the more important ones by heart, and had no need to consult the list on the back of his pad. Switching to the display unit's short-term memory, he would hold the front page while he quickly searched the headlines and noted the items that interested him.

Each had its own two-digit reference; when he punched that, the postage-stamp-sized rectangle would expand until it neatly filled the screen and he could read it with comfort. When he had finished, he would flash back to the complete page and select a new subject for detailed examination.

Floyd sometimes wondered if the Newspad, and the fantastic technology behind it, was the last word in man's quest for perfect communications. Here he was, far out in space, speeding away from Earth at thousands of miles an hour, yet in a few milliseconds he could see the headlines of any newspaper he pleased. (That very word "newspaper," of course, was an anachronistic hangover into the age of electronics.) The text was updated automatically on every hour; even if one read only the English versions, one could spend an entire lifetime doing nothing but absorbing the ever-changing flow of information from the news satellites.

It was hard to imagine how the system could be improved or made more convenient. But sooner or later, Floyd guessed, it would pass away, to be replaced by something as unimaginable as the Newspad itself would have been to Caxton or Gutenberg.

They were so close; why didn’t they embrace their true potential?

A good friend of mine, Dave, suggested the following possibility: they ruled it out because of good technical sense. The movie reflects the prevailing mode of computing at the time, a large centralized computer (HAL in the spaceship) with users getting access piecemeal via dumb terminals or displays.

It was hard for them to imagine another reality. The technical marvel that was SAGE relied on a computer called the AN/FSQ-7, or Q7 for short, which weighed 250 tons, took up 22,000 sq. ft., and had a floor of a bunker dedicated to it; another two were dedicated to the user interface;

A view of the floor plans obtained from a digitised IBM manual,

Transistors had made computers like the Q7 smaller, but it wasn’t obvious at the time just how powerful miniaturization was going to be. Integrated circuits had debuted less than half a decade earlier, and they just weren’t very good. The defining technology of our age looked like a dud in the 1960s.

If an internet comment section had been around during the debut of the IC, its commenters would have pointed out that decades of research had conclusively shown that nichrome was the best material to make resistors with, and mylar the best for capacitors. Silicon? Bah, humbug.

And they would have been right.

To quote Jack Kilby himself, “These objections were difficult to overcome, [..] because they were all true.” The IC also faced headwinds from the industry. Consequently, the vast majority of the industry didn’t take one of the defining inventions of the century, arguably of human history, seriously.

As late as the spring of 1963, most manufacturers believed that integrated circuits would not be commercially viable for some time, telling visitors to their booths at an industry trade show that “these items would remain on the R&D level until a breakthrough occurs in technology and until designs are vastly perfected.”

— Leslie Berlin. “The Man Behind the Microchip” (p. 136) quoting Richard Gessell, “Integrated Circuitry Held Far From Payoff,” Electronic News, 27 March 1963.

No one lavished magazine pages on it. No one wrote much about it, if at all, except to criticise it and the absurd Apollo Program’s boneheaded decision to use it to make a guidance computer instead of a sensible solution like discrete transistors. In 1959, according to the technical press of the day, the real breakthroughs were gallium arsenide diodes and backward-wave oscillators.

No one took it seriously other than a few companies across the US and Eldon C. Hall.

Standing in the mid-1960s, it would have been fantastical to suggest that this technology would keep miniaturizing, offering better performance for less money while doing pre-existing operations faster every time the feature size shrank. And yet, that is what occurred.

Our reality has ended up far more miraculous than anything Kubrick and Clarke could have contrived. We have stagnated in areas where they thought we would prosper. We have prospered where they believed anything but stagnation was impossible.

They couldn’t imagine the iPad because they couldn’t re-imagine their constraints.

Tablets don’t make sense in a mainframe environment. There’s something a bit like a light-pen in 2001; it’s shown in the scene when Dave, Frank and HAL are troubleshooting a circuit, but it’s tethered to a workstation. Sensibly so.

From their perspective, a portable tablet-like device would have been absurd. As a dumb terminal, it would have to constantly, wirelessly poll the mainframe while simulating writing on paper. Why would you do that when you could just write on paper? Why would you waste the bandwidth and resources necessary to perform these constant operations when paper would offer better fidelity for cheaper?

It’s a compelling argument. Some people still make it today.

On the other hand, portable media consumption would have made a lot more sense to them — transistor radios were a runaway success and were an early indication of what was to come. And they weren’t wrong in this regard. The vast majority of tablet users use their portable devices to consume rather than produce. Most of the time. But the possibility that the device could be more than that eluded them. They just couldn’t see outside that narrow use case and imagine what portable computing would enable.

The technology required to shrink computers just wasn’t there and the one touted as the pathway towards it wasn’t credible and was restricted to expensive, limited use cases — or, so their advisors must have told them. And being the clever, well-educated people they were, Kubrick and Clarke believed them.

Which is why they came so close and yet remained so frustratingly far from predicting the iPad.

My father would often talk about achievement in the sense of goals that were “pure” i.e. things that couldn’t be bought or lucked into, but rather were your own. I would quibble with the definition (what counted as such varied), but I do respect what he was doing. His particular mix of work ethic and ambition is something that could serve many of us well.

Similar to the screenshots here, I printed out a frame each time a particular type of technology was used and wrote up a bit of its history. I took pains to argue that in each case, from PanAm to Ma Bell, companies were unable to capitalise on their R&D (I had watched Pirates of Silicon Valley by this point).

I had also read HAL’s Legacy (someone at MIT had posted the book online in its entirety. There’s your metaphor for the future) and borrowed its arguments about how uneven progress has been. To quote Dr. David Kuck,

“Computer scientists and artificial intelligence (AI) specialists have failed to fulfill many aspects of the Clarke-Kubrick vision. They have not created computer systems that can mimic the behavior of the human brain; nor have they been able to build systems that can learn to imitate many human skills. However, in the past thirty years, systems have been created that far exceed human capabilities in many more areas than were anticipated in the mid-1960s.”

Computers are amazing. Our expectations keep surpassing their wonderful capabilities.

I also made the argument that they missed the social changes unfolding right in front of them. Technology is easier to predict than society, I guess.

Pioneers are seldom appreciated in their time.

Minus the joystick. I would like to go on the record expressing my reservations about an all automated, all glass cockpit capsule design.

Chess, alongside NLP, was one of the earliest AI frontiers. Konrad Zuse tried to program the Z3 to play chess. Alan Turing tried his hand at it too. Stanislaw Ulam helped write a chess program at post-war Los Alamos. So did John McCarthy, elsewhere. Most early AI researchers were obsessed with chess. Some believed that it was a means of achieving and measuring intelligence. At the time, the idea that computers could (eventually) eclipse humans in chess was rank heresy outside of the field. In that view, HAL playing and winning a game against an intelligent person, an astronaut of all people, was a big deal.

It can be argued that my statement is incorrect. RCA had the electroluminescent lab prototype that Jan Rajchman made, but it wasn’t a screen per se. The one-off demonstration mural had 1,200 electroluminescent pixels, each roughly 1.2 cm x 1.2 cm, in a 30x40 grid. I have not counted it as a functional screen because it wasn’t a monolithic screen; it was explicitly designed as a mosaic of several smaller units.

Yes, it was a very crude macro-version of what came later. But I am drawing an arbitrary boundary and saying that it wasn’t a screen per se.

From an oral history interview with Donald Bitzer by the Computer History Museum,

The biggest panel we built was a 16-by-16, which was early on. Which was good enough we could carry it around, write things on it, the characters and letters, and show people how it erases. We put it in a little suitcase. And then we started working with companies. And Owens-Illinois Glass Company was the first one. [..] They said they heard about this. They liked to take a look at it. And so they decided, said, "This is a glass product. We ought to be doing it." [..] And they did, and they did a good job. †

They had some nice changes in things that made things better. And they produced panels up to 512-by-512. [..] I think it took that long for them to get everything done, [… but] they worked on it pretty hard. And it might have taken two years of time.

There's a lot to do to be sure you can manufacture them and guarantee them for a long life. And they did that. First of all, testing had to be advanced testing, because you wanted these panels to last for ten, fifteen, twenty years. And you couldn’t test them in real time. You had to do aggressive testing of some kind. And they did that, so they did a lot of work that we would have had to do. We didn’t build our own in the laboratory, larger panels, until later on…

— Donald Bitzer, Computer History Museum Oral History Interview Transcript, 2022

† The way these engineers convinced their managers was hilarious, “And the way they decided to do it was to the management was they took in what they had to do to make plasma panels and showed them. And they showed them what this thing they called this iconoscope tube that they were then making, the <inaudible 01:48:19> cathode ray tube that introduced the shadow mass tubes. And they told them what that took.

And when they got done, the management said, ‘Don't touch those.’ They said, ‘They're too hard. Go work on the other one.’ That's how they got permission to do this.”